OBJECTIVE

Sense is the first Artificial Intelligence solution to find patterns in student submissions and provide personalized feedback, using unsupervised learning and natural language processing.

This hybrid human-machine intelligence solution drastically reduces grading time, provides greater accuracy and equity in grading, and allows for more personalized and timely feedback to students.

As the Product Designer and Strategy consultant, I was tasked with improving the existing user experience and interface design within a tight three-month timeline.

RESULTS

An enhanced Minimum Viable Product (MVP) was created for the Sense platform, focusing on improving both the instructor and student experiences.

For instructors, the new platform offers an intuitive experience that seamlessly guides them through the process of evaluating and providing feedback on student submissions. The dashboard provides comprehensive statistics on student submissions, along with in-depth analysis.

For students, Sense provides timely and encouraging hints and feedback at every stage of the assignment. Results are clearly communicated to help students understand how they performed at each stage leading to the final score. The dashboard gives a quick view of their assignments and tracks their progress throughout the semester.

HOW IT WORKS

THE USERS

The Sense users are instructors (professors / teaching assistants) and their students.

We started with Computer Science education as a testing ground. Once proven effective in this domain, the Sense platform can be adapted to other subjects with open-ended assignments.

This strategic decision to focus initially on Computer Science was guided by several key factors:

Open-Ended Assignments

The field often requires students to complete open-ended assignments that can have a multitude of correct solutions or approaches.

Data-Driven Feedback

Computer Science lends itself to data-driven feedback: Sense identifies patterns of errors and offers targeted suggestions for improvement.

Volume of Submissions

Computer Science courses have high enrollment and a large number of assignments to grade.

Sense expedites the review process, allowing educators to manage more students and work more efficiently.

Adaptive Learning

Sense uses AI to help create adaptive learning paths by identifying areas where a student may be struggling and providing customized resources or exercises.

ORIGINAL DESIGN

The original application was developed by engineers and had a very basic design, with a focus on functionality rather than the user experience. It was built solely for the instructor with no consideration for the student’s experience.

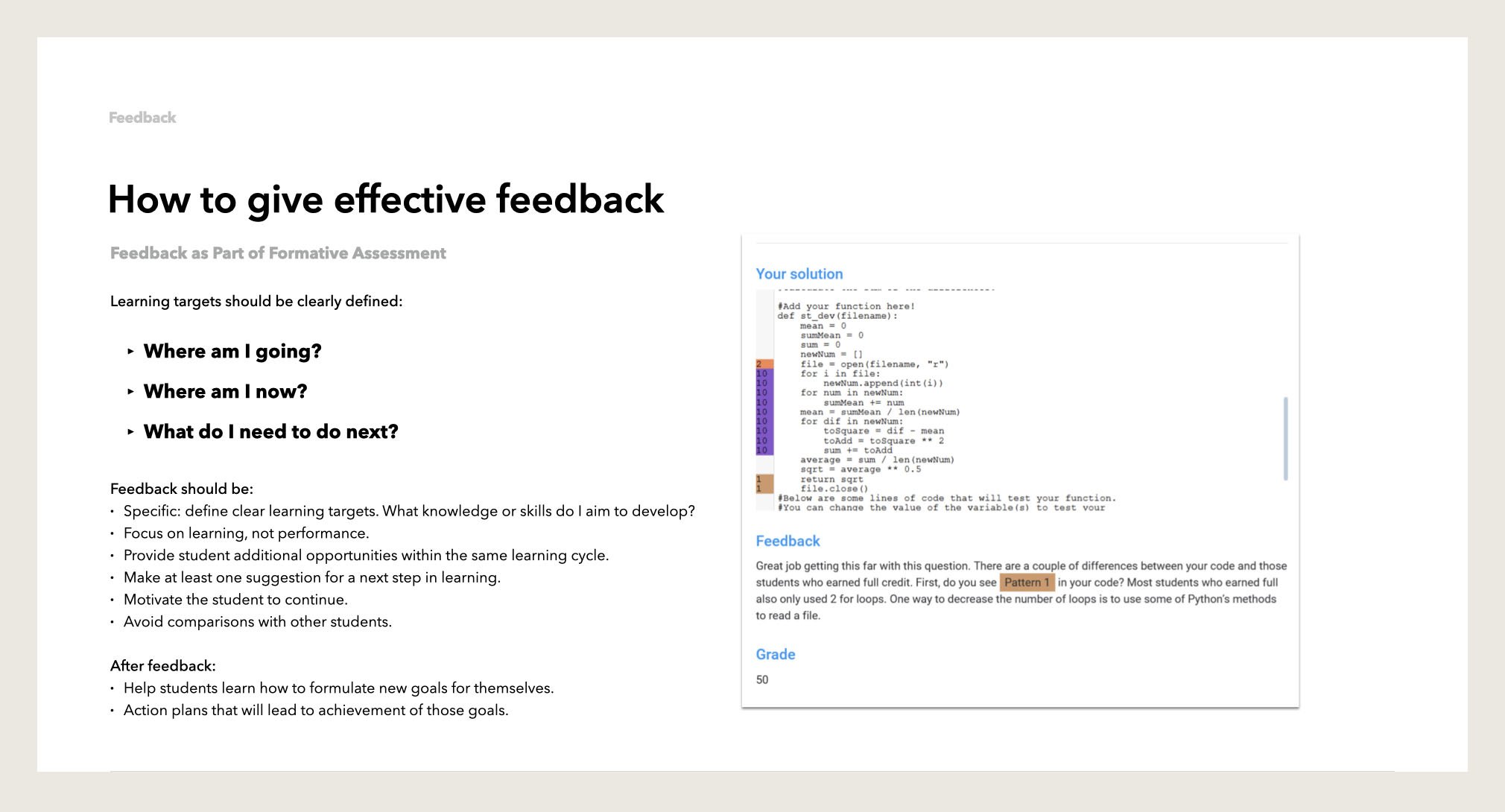

RESEARCH: Student Feedback

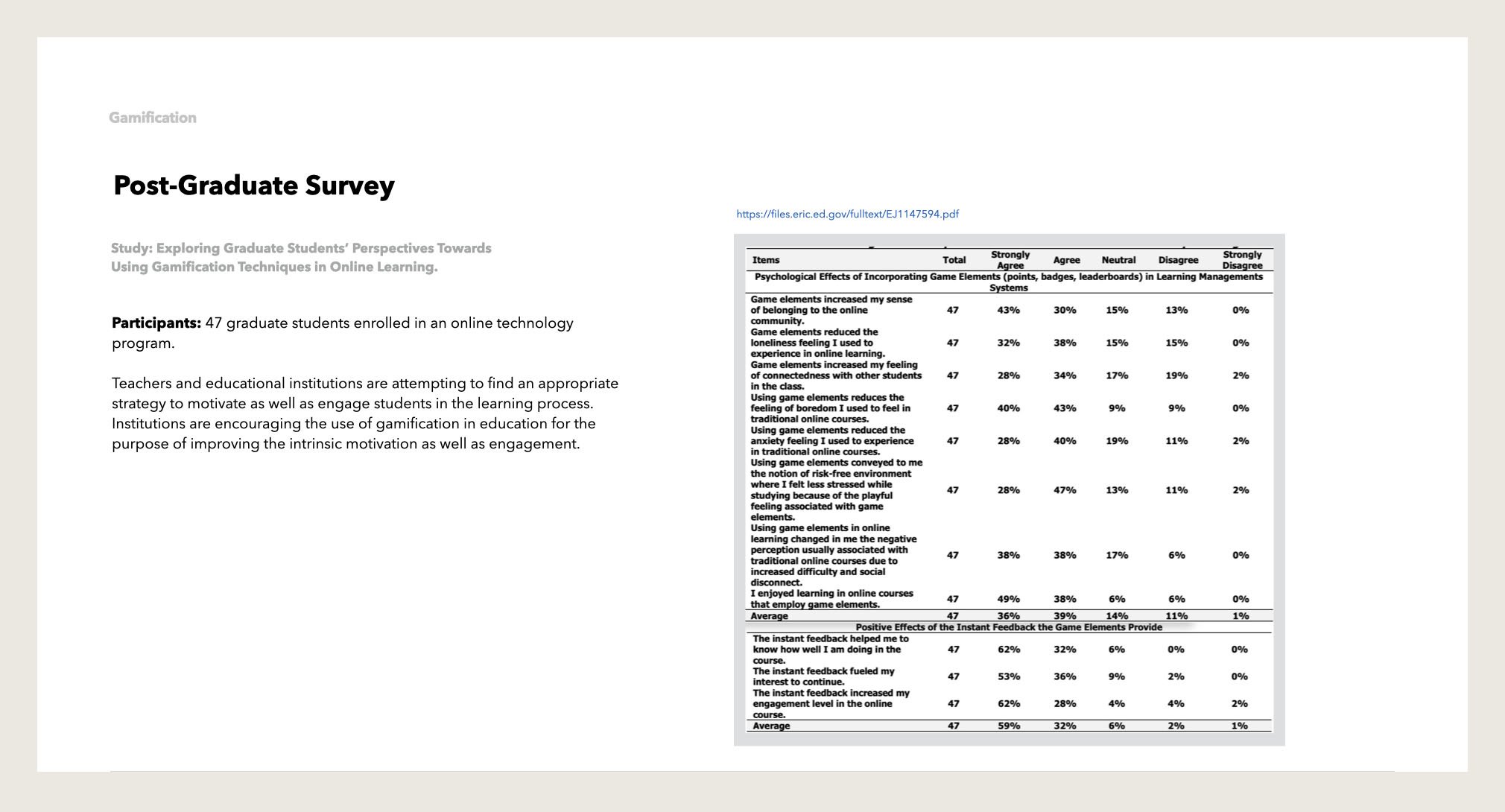

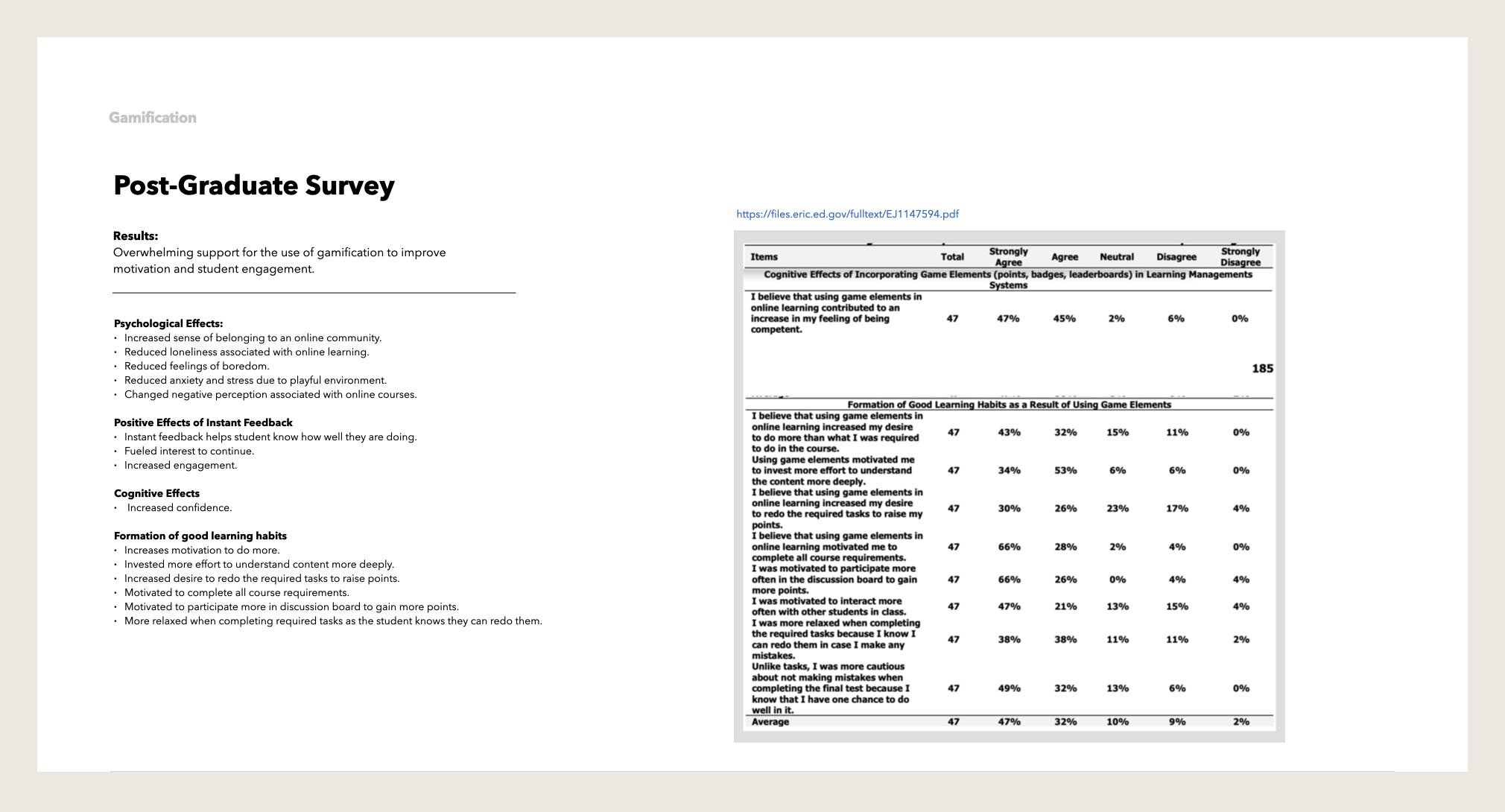

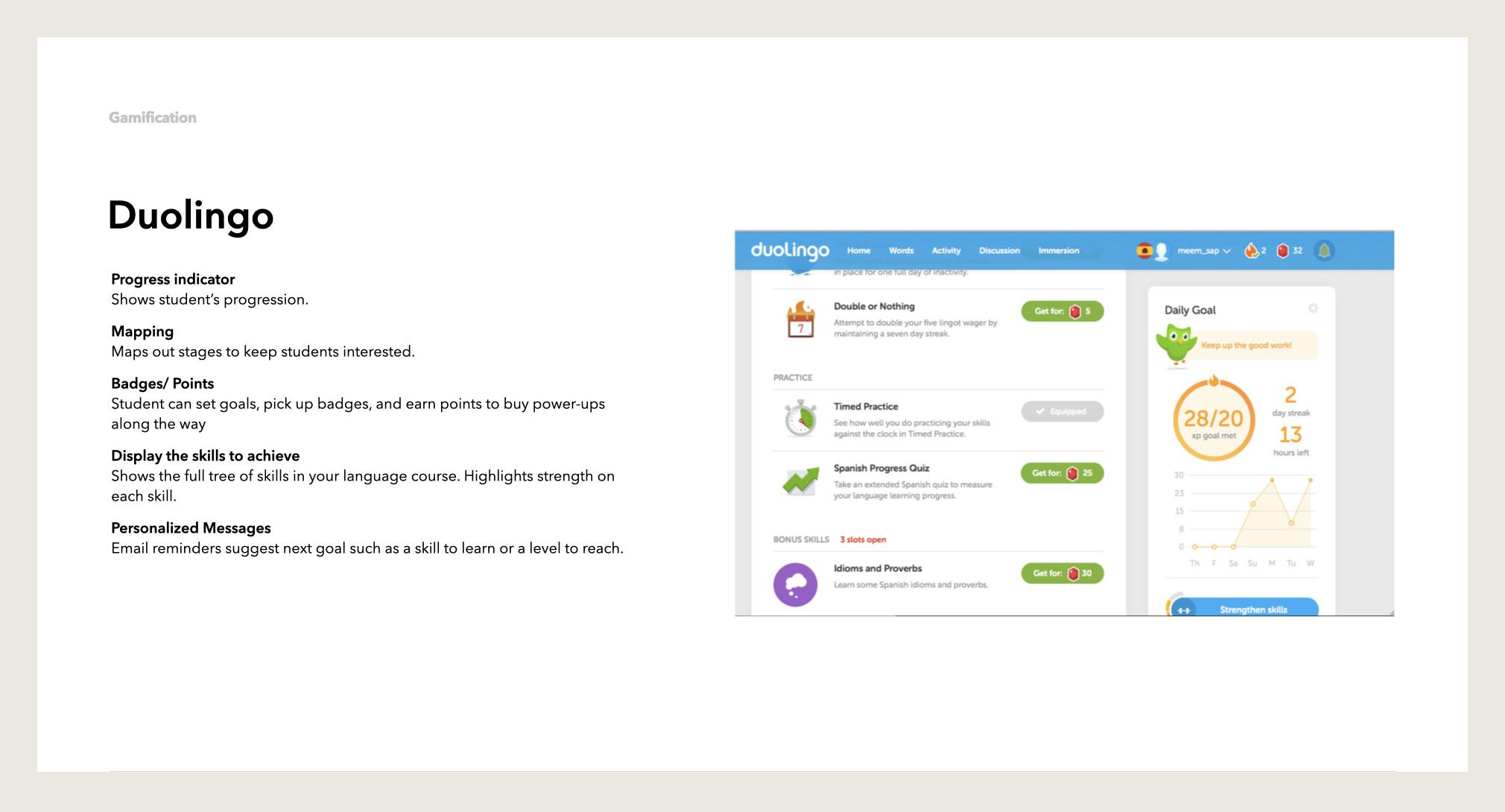

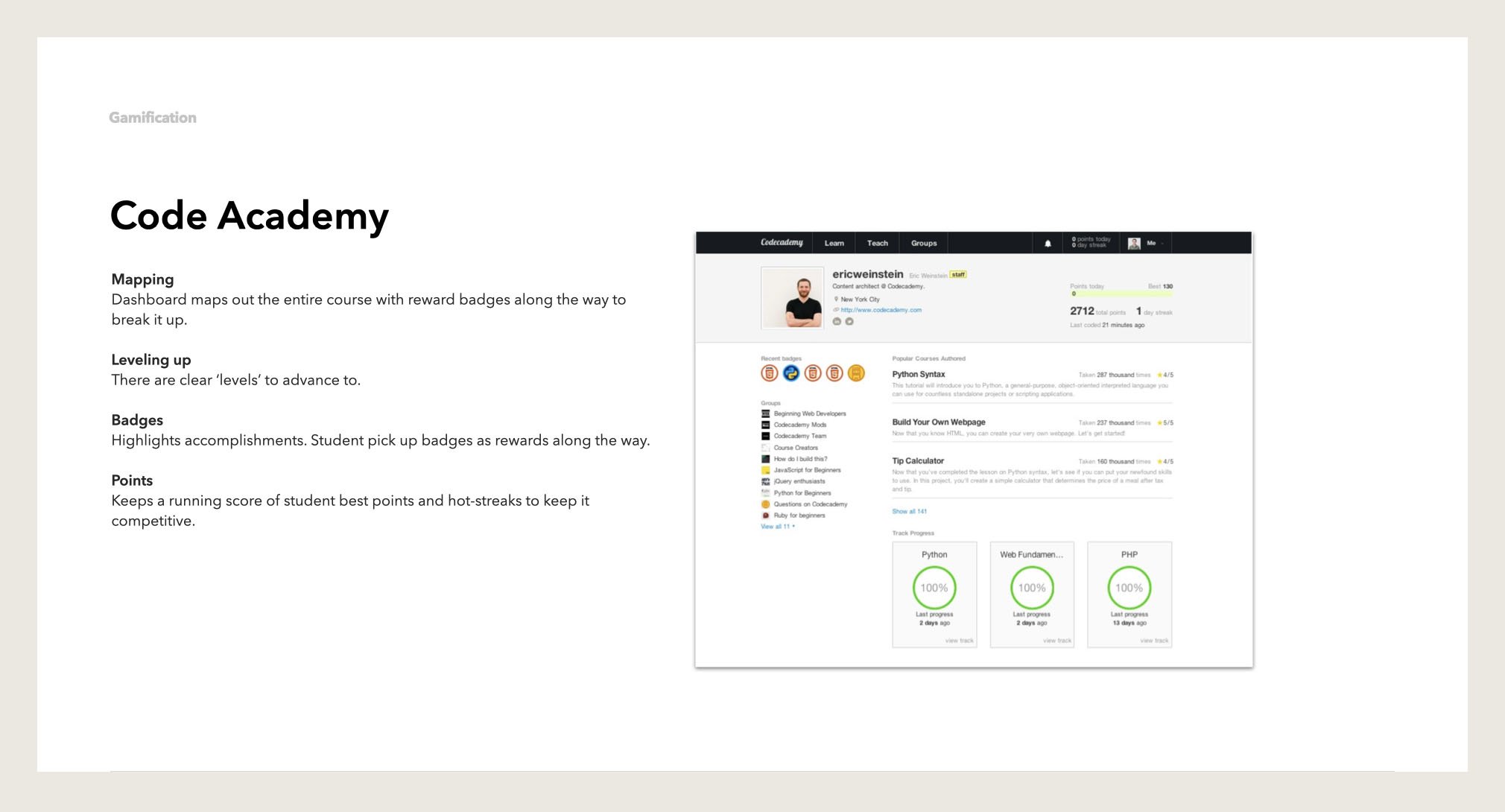

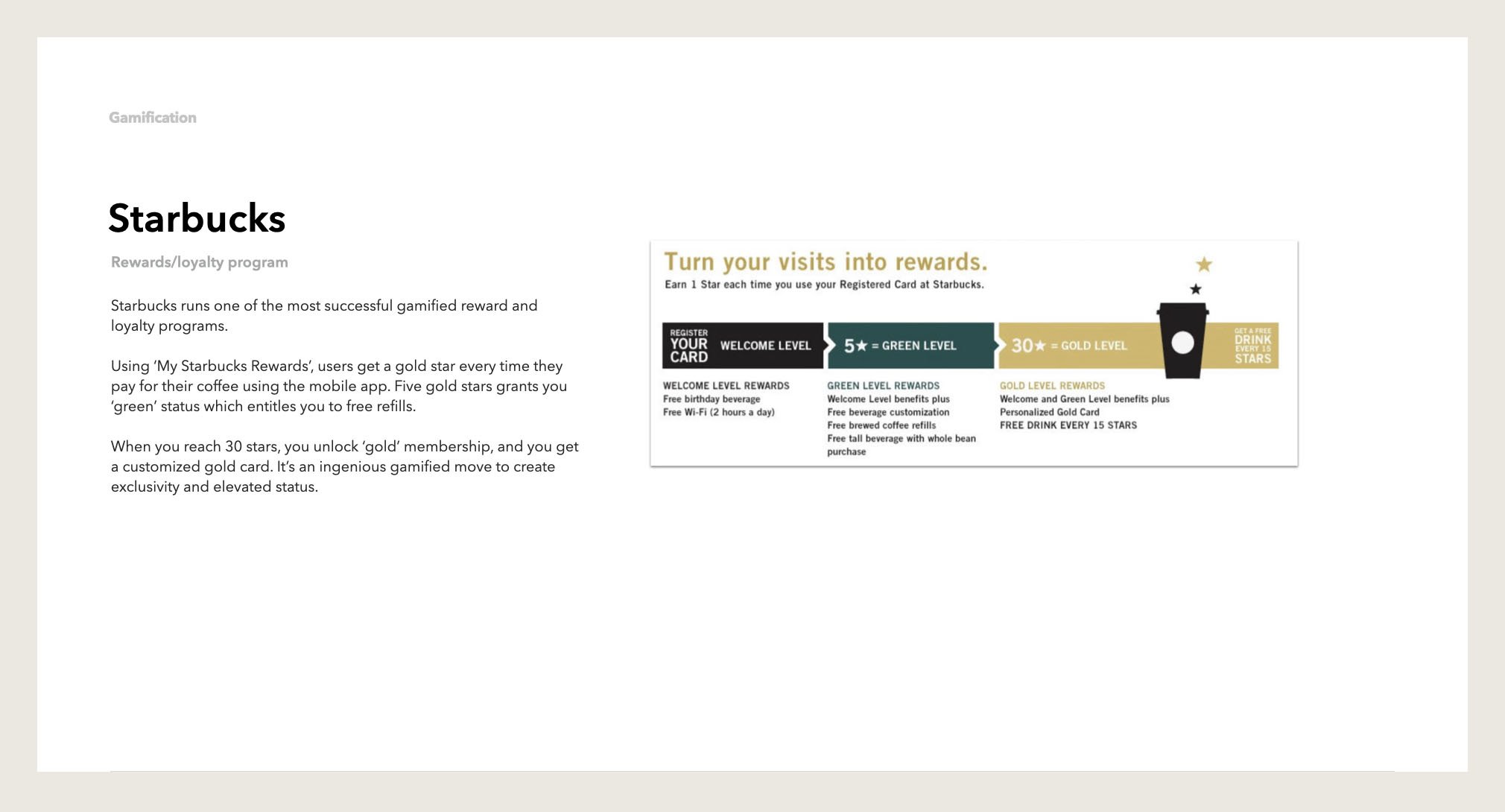

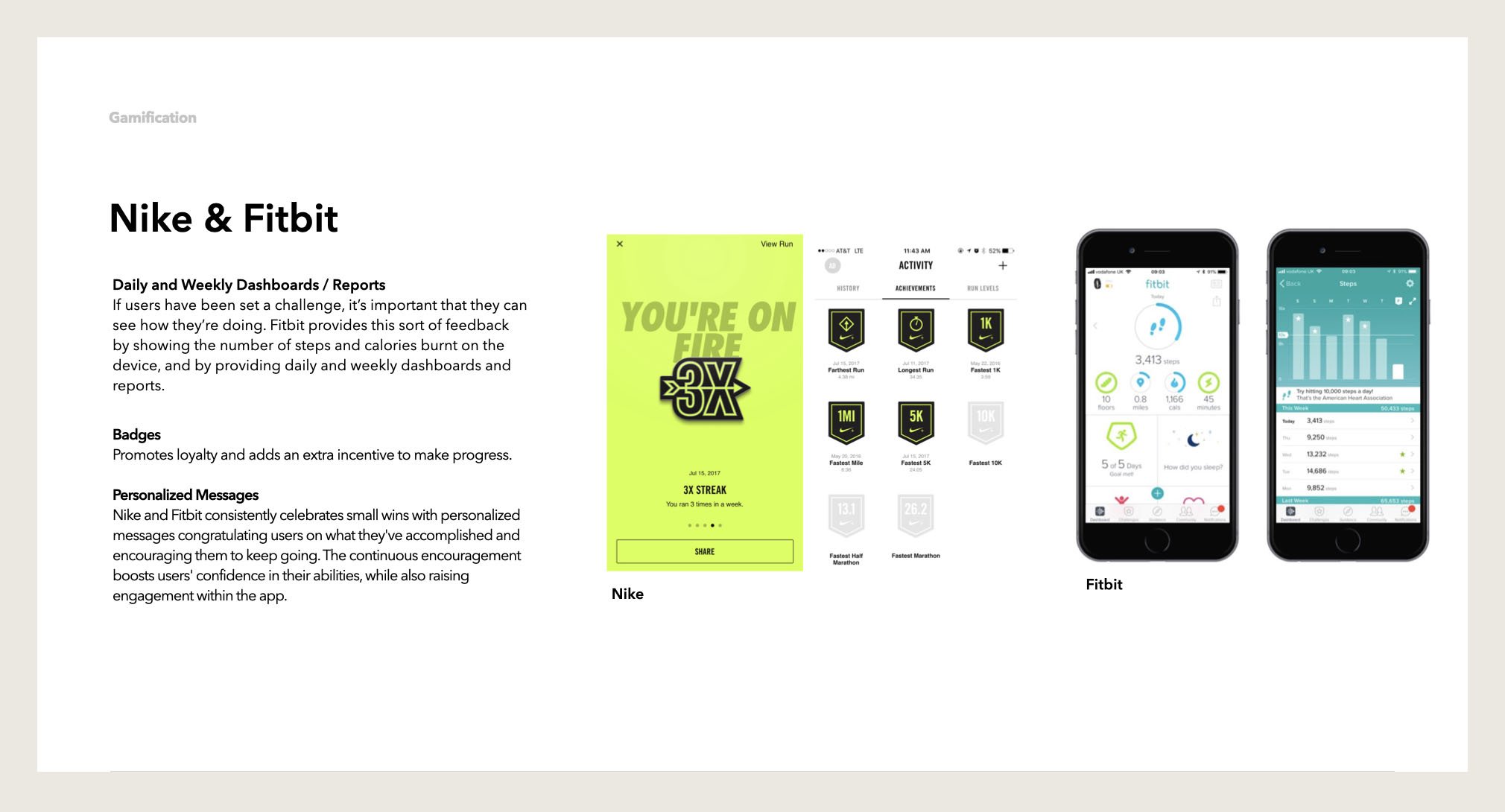

After meeting with stakeholders, conducting a comprehensive review of the application, and gaining a thorough understanding of its functionality, I initiated my research phase. I began by exploring contemporary trends in EdTech and subsequently narrowed my focus to the area of student feedback. I also examined how apps like Starbucks successfully utilize gamification and reward systems to enhance motivation.

INSIGHTS

The evolution of EdTech has led to innovative methods for engaging and guiding students throughout their learning journeys. From immediate feedback to gamification principles, these features enhance the educational experience:

Real-time Feedback

Immediate insights on quiz or test performance help students identify and focus on weak areas.

Reward Systems

Points, badges, and leaderboards reward progress and mastery, motivating students to engage further.

Positive Affirmations

Gamified platforms often incorporate positive affirmations for correct answers or when students achieve milestones, boosting their confidence.

Encouraging Progress

Even when students get answers wrong, some gamified systems provide encouragement to try again, emphasizing the learning journey over the final score.

CREATING AN ASSIGNMENT

This user flow illustrates the instructor’s experience in creating assignments and integrating them with AI for the purpose of analyzing student submissions for common patterns.

AI: Unsupervised Learning Analysis

The platform employs unsupervised learning algorithms to analyze the incoming student code submissions. The AI detects patterns and similarities among the submissions.
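The case study does not say which similarity measure Sense uses. As a minimal sketch, assuming submissions are compared by the overlap of their code tokens, a pairwise Jaccard similarity could look like this. The function names and the tokenizing regex are illustrative assumptions, not Sense's implementation.

```python
import re
from itertools import combinations


def tokens(source: str) -> set[str]:
    """Split source code into a set of word tokens and single-character symbols."""
    return set(re.findall(r"\w+|[^\w\s]", source))


def jaccard(a: set[str], b: set[str]) -> float:
    """Fraction of tokens two submissions share (0.0 = nothing, 1.0 = identical sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def similarity_matrix(submissions: dict[str, str]) -> dict[tuple[str, str], float]:
    """Compute Jaccard similarity for every unordered pair of submission IDs."""
    token_sets = {sid: tokens(src) for sid, src in submissions.items()}
    return {
        (x, y): jaccard(token_sets[x], token_sets[y])
        for x, y in combinations(sorted(token_sets), 2)
    }


submissions = {
    "s1": "for i in range(n): total += i",
    "s2": "for j in range(n): total += j",
    "s3": "return sum(range(n))",
}
sim = similarity_matrix(submissions)
```

Here the two loop-based submissions score about 0.83 against each other, while `s1` and `s3` score about 0.31, so structurally similar solutions surface as close pairs.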

Cohort Formation

Based on the analysis, the AI organizes the code submissions into cohorts. These cohorts group together submissions that share similarities in logic, structure, and potential errors or that demonstrate similar levels of understanding and skill.
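One simple way to turn pairwise similarity into cohorts is single-linkage grouping: any two submissions whose similarity meets a threshold end up in the same cohort. The sketch below uses that swapped-in technique with a token-overlap (Jaccard) measure and a hypothetical `threshold` of 0.6; Sense's actual clustering algorithm is not described in this case study.

```python
import re
from itertools import combinations


def tokens(source: str) -> set[str]:
    """Split source code into a set of word tokens and single-character symbols."""
    return set(re.findall(r"\w+|[^\w\s]", source))


def jaccard(a: set[str], b: set[str]) -> float:
    """Fraction of tokens two submissions share."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def form_cohorts(submissions: dict[str, str], threshold: float = 0.6) -> list[list[str]]:
    """Single-linkage grouping via union-find; `threshold` is a hypothetical cutoff."""
    ids = sorted(submissions)
    parent = {sid: sid for sid in ids}

    def find(x: str) -> str:
        # Walk up to the cohort representative, halving the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    token_sets = {sid: tokens(submissions[sid]) for sid in ids}
    for a, b in combinations(ids, 2):
        if jaccard(token_sets[a], token_sets[b]) >= threshold:
            parent[find(a)] = find(b)  # merge the two cohorts

    groups: dict[str, list[str]] = {}
    for sid in ids:
        groups.setdefault(find(sid), []).append(sid)
    return sorted(groups.values())


cohorts = form_cohorts({
    "s1": "for i in range(n): total += i",
    "s2": "for j in range(n): total += j",
    "s3": "return sum(range(n))",
    "s4": "return sum(range(n + 1))",
})
```

With the loop-based and `sum(range(...))`-based solutions above, `form_cohorts` returns `[['s1', 's2'], ['s3', 's4']]`, so an instructor would review two groups instead of four individual submissions.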

Natural Language Processing (NLP)

Natural Language Processing (NLP) analyzes the student submissions within each cohort and generates insights into common patterns, mistakes, or areas for improvement. It can then articulate these findings in clear, human-like language that the instructor can use to provide targeted feedback to each cohort. This allows instructors to offer more personalized and relevant responses, saving time while providing higher quality feedback.

USER TESTING

I was tasked with conducting quick, guerrilla-style user testing within a constrained timeline and budget. For this study, I recruited students from Georgia Tech, where Sense ran a pilot program. In moderated user testing sessions, they provided critical feedback on the initial design concept, which fueled a series of iterative refinements.

“I would like to see my status regarding the completion of the course.”

“I do not understand the significance of the colored bar next to the code.”

“I like the positive tone - I would have been disappointed with an 80, but was not discouraged.”

MINIMUM VIABLE PRODUCT (MVP)

The final MVP prototype was built after three months of definition, discovery, testing, and iteration. These screens represent both the instructor and the student experiences.

INSTRUCTOR: Assignment

The AI uses rules and queries to detect patterns, categorizing submissions into logical groups based on similarities in problem-solving approaches.

Prioritization levels (low, medium, high) are assigned to each criterion, guiding the AI in data weighting when running the model.
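A minimal sketch of how low, medium, and high priorities could weight criteria, assuming each criterion yields a similarity score between 0 and 1 and that the levels map to weights of 1, 2, and 3. Those weights and the criterion names (`logic`, `structure`, `style`) are hypothetical; the case study only states that priority levels guide the data weighting.

```python
from enum import Enum


class Priority(Enum):
    # Hypothetical numeric weights; the values Sense actually uses are not documented.
    LOW = 1.0
    MEDIUM = 2.0
    HIGH = 3.0


def weighted_score(criterion_scores: dict[str, float], priorities: dict[str, Priority]) -> float:
    """Weighted average of per-criterion scores, using each criterion's priority as its weight."""
    total_weight = sum(priorities[c].value for c in criterion_scores)
    if total_weight == 0:
        return 0.0
    weighted_sum = sum(score * priorities[c].value for c, score in criterion_scores.items())
    return weighted_sum / total_weight


priorities = {"logic": Priority.HIGH, "structure": Priority.MEDIUM, "style": Priority.LOW}
scores = {"logic": 0.9, "structure": 0.5, "style": 0.2}
combined = weighted_score(scores, priorities)
```

The combined score here is about 0.65, pulled toward the high-priority `logic` criterion rather than the plain average of about 0.53.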

The instructor gives feedback at the group level, writing one comprehensive set of comments per group, which streamlines the evaluation process.

INSTRUCTOR: Dashboard

The dashboard presents a comprehensive view of all student assignments, offering educators a strategic snapshot that includes the volume of submissions over time, coupled with insights into overall student performance and success rates.

Detailed assignment analytics show the number of patterns and groups specified for each assignment, letting educators refine feedback directly and export data for further evaluation or reporting.

STUDENT: Assignment graded

The assignment result displays a step-by-step score breakdown, helping students understand their performance on each segment of the assignment leading up to the final result.
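The step-by-step breakdown amounts to a running total across graded segments. A minimal sketch, assuming each segment reports points earned and points possible; the `StepResult` type, the helper names, and the segment names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StepResult:
    name: str        # hypothetical segment label shown to the student
    earned: float    # points the student earned on this segment
    possible: float  # maximum points for this segment


def score_breakdown(steps: list[StepResult]) -> list[tuple[str, float, float]]:
    """Return (segment name, running points earned, running points possible) after each segment."""
    rows = []
    earned = possible = 0.0
    for step in steps:
        earned += step.earned
        possible += step.possible
        rows.append((step.name, earned, possible))
    return rows


def final_percentage(steps: list[StepResult]) -> float:
    """Overall score as a percentage of all possible points."""
    possible = sum(s.possible for s in steps)
    return 100.0 * sum(s.earned for s in steps) / possible if possible else 0.0


steps = [
    StepResult("Parse input", 20, 20),
    StepResult("Core algorithm", 45, 50),
    StepResult("Edge cases", 15, 30),
]
rows = score_breakdown(steps)
```

For these segments the running totals are 20/20, 65/70, and 80/100, and `final_percentage(steps)` returns 80.0, so a student can see exactly which segment cost the missing points.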

Includes motivational support, coupled with direct links to pertinent resources and materials for additional learning and improvement.

STUDENT: Dashboard

The dashboard displays a snapshot of each student's assignments and tracks their progress during the semester.

Highlights specific areas of concern within each assignment for easy review.